Overview

This article examines why mastering latency in speech recognition is critical to AI voice performance. It explains why minimizing response delays matters for effective communication. It also outlines the main factors that contribute to latency, such as network delays and processing times.

It then presents actionable strategies for reducing latency, with the goal of a seamless and engaging user experience in voice applications. Together, these elements are meant to help readers improve their own voice application strategies.

Introduction

Latency in speech recognition is more than a technical detail. It significantly shapes how users experience and interact with AI voice applications. As the technology advances, understanding and controlling latency becomes imperative for developers and businesses alike.

What strategies can be employed not only to minimize delay but also to elevate the overall performance of voice systems? This article meticulously examines the intricacies of latency in speech recognition, investigates its ramifications, identifies sources of delay, and presents actionable solutions to foster seamless, engaging interactions.

Define Latency in Speech Recognition

Latency in speech recognition is the time delay between a person's spoken input and the system's response. This delay is measured in milliseconds (ms) and strongly affects the user experience. Long waits create uncomfortable pauses that interrupt the conversational flow. For natural dialogue, a latency under 500 ms is ideal.
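To make this definition measurable, you can time each conversational turn. The example below is a minimal sketch. It assumes latency is counted from the moment the user stops speaking to the moment the system starts responding, and that your pipeline exposes both events. `TurnTimer` and its methods are hypothetical names and are not part of any library.

```python
import time
from typing import Optional

TARGET_MS = 500.0  # "under 500 ms is ideal", per the definition above


class TurnTimer:
    """Hypothetical helper that times one conversational turn.

    Assumes the pipeline can signal when the user stops speaking and when
    the system starts responding (for example, the first audio byte played).
    """

    def __init__(self) -> None:
        self._speech_end: Optional[float] = None
        self.latency_ms: Optional[float] = None

    def end_of_speech(self) -> None:
        # perf_counter is monotonic, so wall-clock adjustments cannot skew it.
        self._speech_end = time.perf_counter()

    def first_response(self) -> float:
        if self._speech_end is None:
            raise RuntimeError("end_of_speech() must be called first")
        self.latency_ms = (time.perf_counter() - self._speech_end) * 1000.0
        return self.latency_ms

    def within_target(self, target_ms: float = TARGET_MS) -> bool:
        return self.latency_ms is not None and self.latency_ms < target_ms


if __name__ == "__main__":
    timer = TurnTimer()
    timer.end_of_speech()
    time.sleep(0.05)  # stand-in for recognition plus response generation
    print(f"latency: {timer.first_response():.0f} ms, within target: {timer.within_target()}")
```

Where "end of speech" is detected matters as much as the timer itself. A system that declares the turn over too early looks fast but can cut users off.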

In 2025, advancements in speech AI are expected to reduce average delays further, according to several industry predictions, which would improve responsiveness and customer satisfaction. Businesses that keep latency low in their voice applications can significantly increase engagement. According to Telnyx, every millisecond saved in voice AI performance improves user satisfaction and engagement.

Real-world testing has shown that reducing latency is essential for effective communication. This holds particularly for virtual assistants and customer service voicebots, where a seamless conversation is crucial. However, an overly short response time can backfire when users must give long alphanumeric inputs. If the system treats a pause as the end of input, customers become frustrated, as a case study on improving response time in voicebots highlights.
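This trade-off usually comes down to endpointing, the decision that the user has finished speaking. The sketch below assumes silence-based endpointing, a common approach that the case study above does not necessarily use. It also assumes a voice-activity detector supplies one speech/silence flag per 20 ms frame. The 500 ms and 1500 ms silence windows are illustrative values, not recommendations, and `find_endpoint` and `silence_timeout_for` are hypothetical names.

```python
from typing import Iterable, Optional

FRAME_MS = 20  # assumed length of one audio frame


def find_endpoint(voiced_frames: Iterable[bool], silence_timeout_ms: int) -> Optional[int]:
    """Return the frame index at which the turn is declared over, or None.

    voiced_frames holds per-frame voice-activity flags (True = speech), as a
    VAD might produce; the VAD itself is out of scope here.
    """
    needed = max(1, silence_timeout_ms // FRAME_MS)  # silent frames required
    heard_speech = False
    silent_run = 0
    for i, voiced in enumerate(voiced_frames):
        if voiced:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None  # the user never paused long enough


def silence_timeout_for(expecting_alphanumeric: bool) -> int:
    # Respond quickly in open conversation, but allow longer pauses while a
    # caller reads out something like an account or order number.
    return 1500 if expecting_alphanumeric else 500


if __name__ == "__main__":
    # 200 ms of speech, a 600 ms pause between groups, 200 ms more speech, then silence.
    frames = [True] * 10 + [False] * 30 + [True] * 10 + [False] * 80
    print(find_endpoint(frames, silence_timeout_for(False)))  # cut off during the pause
    print(find_endpoint(frames, silence_timeout_for(True)))   # waits for the real end
```

With the 500 ms window, the turn ends at frame 34, inside the caller's pause. The 1500 ms window waits until frame 124, after the second group. The longer window therefore buys accuracy on long inputs at the cost of one extra second of latency at the true end.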

Identify Sources of Latency in AI Voice Agents

Several factors contribute to latency in AI voice agents, and each one affects user experience and interaction quality.

- Network Latency is a primary concern. Delays in transmitting data over the internet can severely affect response times, particularly in cloud-based systems. For a good user experience, response times should ideally stay under 1000 ms. Exceeding this threshold disrupts the flow of interaction and lowers user satisfaction.

- Processing Delays also play a crucial role. The time the AI model takes to analyze and interpret speech adds directly to latency. More complex algorithms can improve accuracy but may slow responses. Techniques such as parallel processing and model optimization help mitigate these delays.

- Hardware Limitations cannot be overlooked. The hardware running the AI voice agent directly determines processing speed, and older or less powerful devices may struggle to meet real-time demands. Specialized hardware, such as GPUs or TPUs, can significantly increase processing capacity and reduce delays.

- The Speech Recognition Model is equally critical. More sophisticated models may be more accurate but can also increase latency. Striking a balance between speed and precision is vital for effective interactions.

- Lastly, the User Environment plays a pivotal role. Background noise and poor microphone quality can reduce the speed and accuracy of speech recognition, leading to delays. Ensuring high-quality audio input helps minimize processing time.
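Most of the factors above surface as time spent in specific stages of a turn. Hardware and model choice, for instance, show up as recognition and generation time. A simple latency budget makes the biggest contributor visible. The sketch below assumes a cascaded pipeline of network round trip, speech recognition, response generation, and speech synthesis. The stage names and millisecond values are hypothetical, not measured data, and `check_budget` is an illustrative helper.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class BudgetReport:
    total_ms: float
    headroom_ms: float  # negative means the target is missed
    largest: List[Tuple[str, float]]  # stages sorted by cost, biggest first


def check_budget(stages_ms: Dict[str, float], target_ms: float) -> BudgetReport:
    """Sum per-stage latencies and compare them against a per-turn target."""
    total = sum(stages_ms.values())
    ranked = sorted(stages_ms.items(), key=lambda kv: kv[1], reverse=True)
    return BudgetReport(total_ms=total, headroom_ms=target_ms - total, largest=ranked)


if __name__ == "__main__":
    # Hypothetical per-turn timings for a cloud-hosted agent.
    stages = {
        "network (round trip)": 120.0,
        "speech recognition": 180.0,
        "response generation": 250.0,
        "speech synthesis (first audio)": 90.0,
    }
    report = check_budget(stages, target_ms=500.0)
    print(f"total {report.total_ms:.0f} ms, headroom {report.headroom_ms:.0f} ms")
    for name, ms in report.largest:
        print(f"  {name}: {ms:.0f} ms")
```

With these sample numbers, the turn takes 640 ms, which is 140 ms over the 500 ms target. Response generation is the largest single stage, so that is where optimization would pay off first.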

Addressing these factors is essential to keeping AI voice agents responsive, so that interactions remain seamless and engaging for users. As industry specialists emphasize, reducing delays is fundamental to building client trust and satisfaction.
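The Processing Delays factor above names parallel processing as a mitigation. One simple form is to start independent steps at the same time instead of running them back to back. The sketch below uses Python's `asyncio`. The two steps, `fetch_customer_record` and `detect_language`, are hypothetical stand-ins simulated with `asyncio.sleep`, and their durations are illustrative.

```python
import asyncio
import time


async def fetch_customer_record(caller_id: str) -> dict:
    await asyncio.sleep(0.15)  # simulated 150 ms lookup (hypothetical step)
    return {"caller_id": caller_id, "tier": "gold"}


async def detect_language(audio: bytes) -> str:
    await asyncio.sleep(0.10)  # simulated 100 ms model call (hypothetical step)
    return "en"


async def sequential(caller_id: str, audio: bytes):
    # Each step waits for the previous one: total time is roughly the sum.
    record = await fetch_customer_record(caller_id)
    lang = await detect_language(audio)
    return record, lang


async def concurrent(caller_id: str, audio: bytes):
    # The steps do not depend on each other, so run them together:
    # total time is roughly the slower of the two.
    record, lang = await asyncio.gather(fetch_customer_record(caller_id), detect_language(audio))
    return record, lang


def timed(coro_fn, *args):
    """Run one coroutine function to completion and return (result, elapsed ms)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(*args))
    return result, (time.perf_counter() - start) * 1000.0


if __name__ == "__main__":
    _, seq_ms = timed(sequential, "caller-123", b"")
    _, par_ms = timed(concurrent, "caller-123", b"")
    print(f"sequential ~{seq_ms:.0f} ms, concurrent ~{par_ms:.0f} ms")
```

This only helps when the steps are truly independent. Steps that need each other's output, such as synthesizing a reply before it has been generated, still have to run in order.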

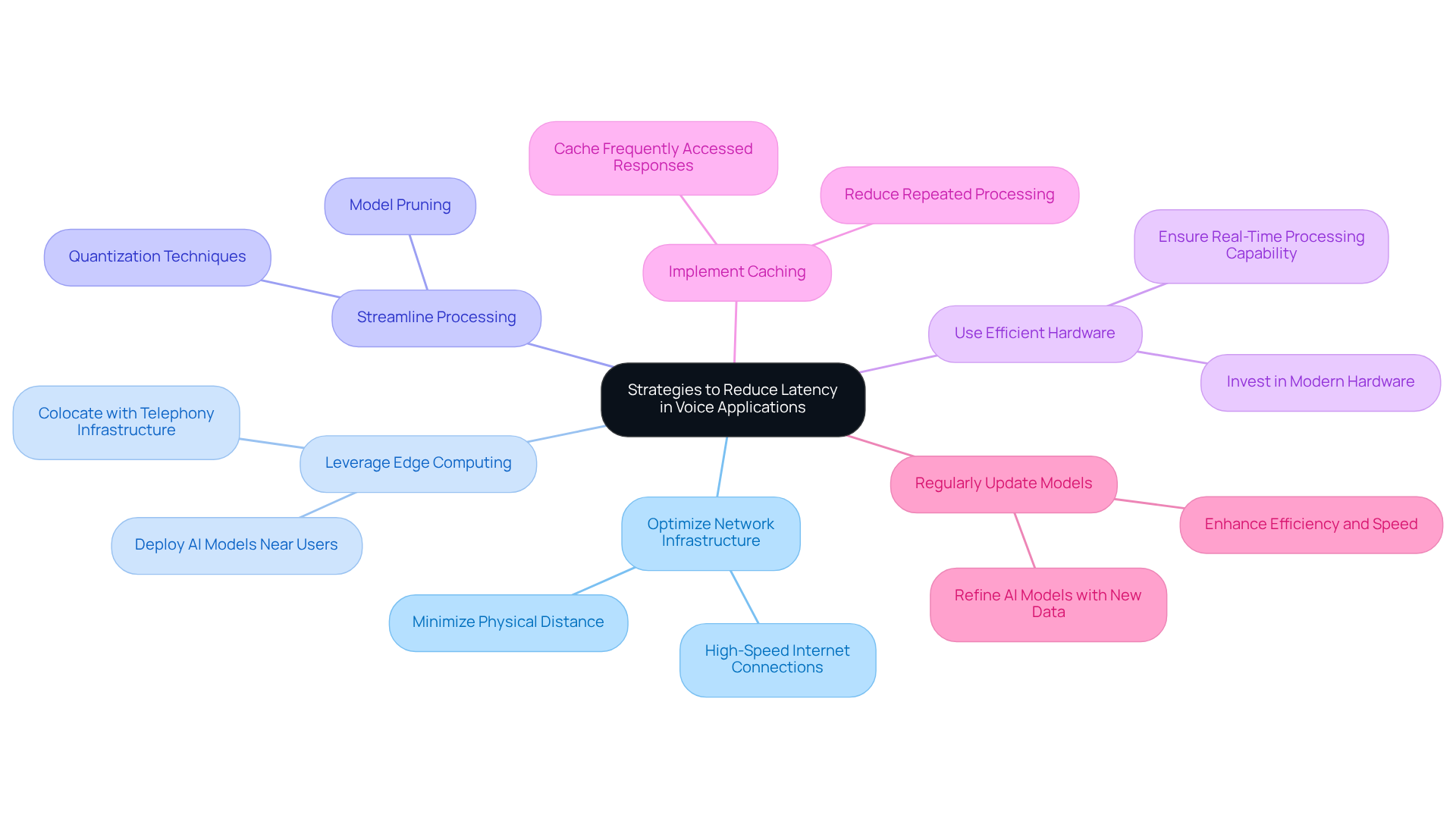

Implement Strategies to Reduce Latency in Voice Applications

To effectively reduce latency in voice applications, several strategies should be prioritized:

- Optimize Network Infrastructure: Use high-speed internet connections and minimize the physical distance between users and servers. This significantly reduces network latency and speeds up responses. As Ian Reither puts it, latency is the silent killer of voice AI, and every millisecond counts.

- Leverage Edge Computing: Deploy AI models closer to the user through edge computing. This shortens the distance data must travel, which lowers latency and makes the experience faster and more seamless. As Telnyx has demonstrated, colocating AI models with telephony infrastructure can achieve round-trip times under 200 ms.

- Streamline Processing: Simplify the speech recognition model where feasible, balancing accuracy against speed. Techniques such as model pruning and quantization can improve processing efficiency with little loss of accuracy.

- Use Efficient Hardware: Invest in modern hardware that can handle the demands of real-time processing, so that AI voice agents operate smoothly and responsively.

- Implement Caching: Cache frequently accessed responses or data. This avoids repeated processing, which speeds up responses and improves user satisfaction. Research indicates that companies optimizing for delays under 300 ms have reported operating cost reductions of up to 15%.

- Regularly Update Models: Continuously refine and update AI models with new data. Keeping models current can improve their efficiency and help reduce processing time, keeping agents nimble and effective.
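The caching strategy above can be as simple as keeping recently generated responses in memory. The sketch below is a small least-recently-used (LRU) cache built on `collections.OrderedDict`. It assumes the expensive step is speech synthesis of fixed phrases. `synthesize` and `speak` are hypothetical stand-ins, and the whitespace normalization is an illustrative choice so that trivially different strings share one entry.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class LRUCache:
    """Small least-recently-used cache; evicts the oldest entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], bytes]) -> bytes:
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = compute()  # the slow path runs only on a miss
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry
        return value


def synthesize(text: str) -> bytes:
    # Hypothetical stand-in for a slow text-to-speech call.
    return text.encode("utf-8")


def speak(cache: LRUCache, text: str) -> bytes:
    key = " ".join(text.split())  # collapse whitespace so trivial variants share an entry
    return cache.get_or_compute(key, lambda: synthesize(key))
```

For example, `speak(cache, "Your order has shipped.")` followed by `speak(cache, "Your  order has shipped.")` synthesizes once and serves the second request from memory. Only responses that do not depend on caller-specific data are safe to cache this way.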

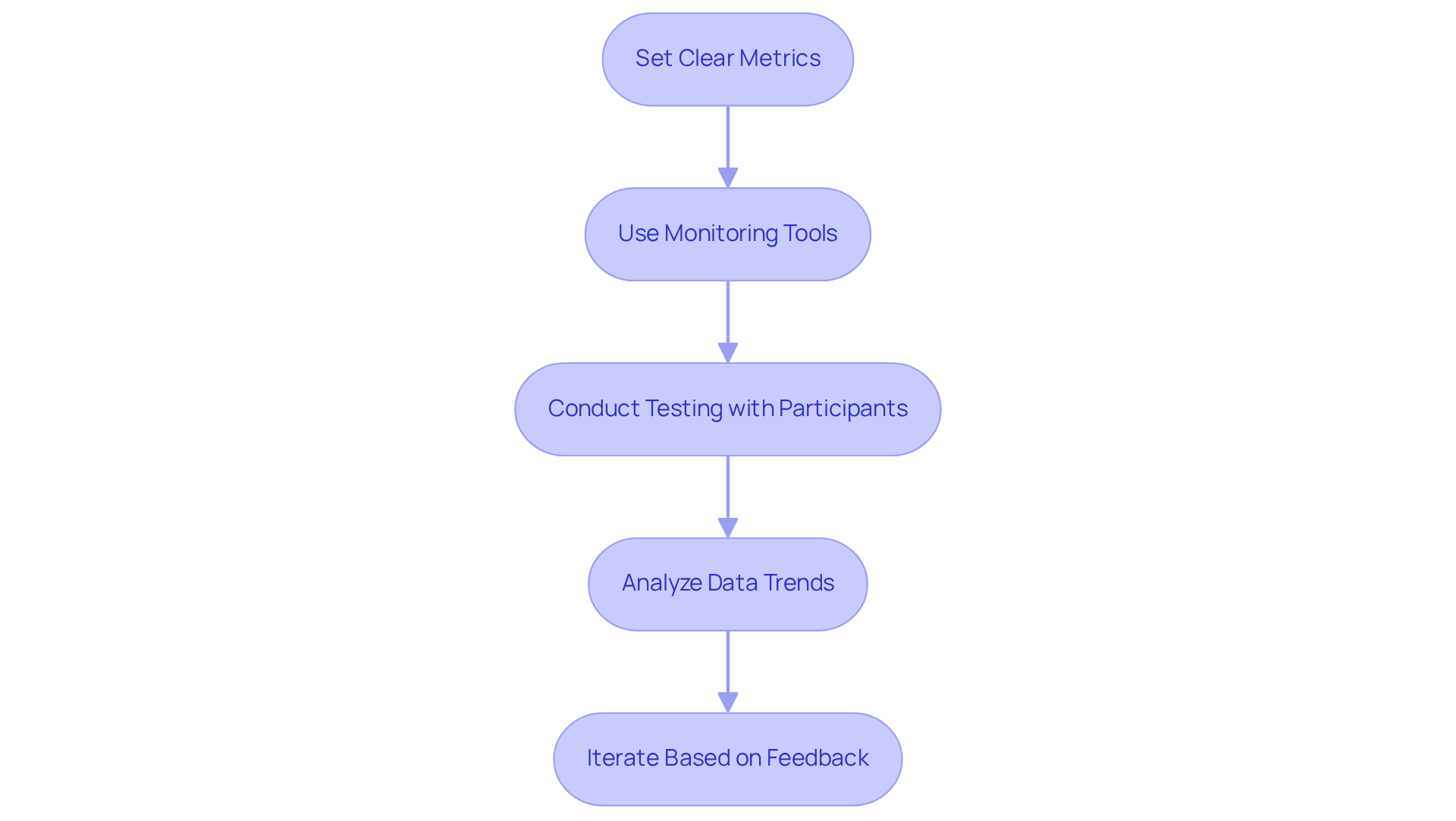
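The network and edge computing strategies above both come down to serving each caller from a nearby location. The sketch below assumes several candidate regions, each reachable at a hostname. It times TCP handshakes as a rough proxy for round-trip time and picks the region with the lowest median. The region names and hosts are hypothetical, and real deployments often rely on DNS-based or anycast routing instead.

```python
import socket
import statistics
import time
from typing import Dict, List


def tcp_connect_ms(host: str, port: int = 443, timeout: float = 2.0) -> float:
    """Time one TCP handshake to host:port as a rough proxy for round-trip time.

    The measurement includes the DNS lookup when host is a name that is not cached.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0


def probe(hosts: Dict[str, str], attempts: int = 3) -> Dict[str, List[float]]:
    """Collect several samples per region; unreachable regions get no samples."""
    samples: Dict[str, List[float]] = {}
    for region, host in hosts.items():
        samples[region] = []
        for _ in range(attempts):
            try:
                samples[region].append(tcp_connect_ms(host))
            except OSError:
                pass  # timeouts, DNS failures, and refused connections are skipped
    return samples


def pick_region(samples_ms: Dict[str, List[float]]) -> str:
    """Choose the region with the lowest median round-trip time.

    The median keeps one slow probe from skewing the choice the way a mean would.
    """
    usable = {region: s for region, s in samples_ms.items() if s}
    if not usable:
        raise ValueError("no latency samples for any region")
    return min(usable, key=lambda region: statistics.median(usable[region]))
```

As an example, with samples `{"us-east": [80, 85, 300], "eu-west": [95, 96, 97]}`, the median picks `us-east` (85 ms against 96 ms). A mean would have picked `eu-west` because of the single 300 ms outlier.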

Measure and Analyze Latency for Continuous Improvement

To ensure continuous improvement in latency, implement the following measurement and analysis techniques:

- Set Clear Metrics: Define key performance indicators (KPIs) such as average response time to track latency, alongside user satisfaction scores and first-call resolution rates to track its effects. Aim to keep the average response time under 1000 ms, the threshold beyond which AI agent interactions start to feel unnatural. Intone’s AI voice agents are designed for sub-500 ms response times to keep the conversation smooth and human-like, even at peak volumes.

- Use Monitoring Tools: Employ real-time analytics tools that report on latency and overall performance. These tools help teams spot issues quickly and respond proactively, keeping the experience consistently good for users.

- Conduct Testing with Participants: Regularly test the AI voice agent with real users to gather feedback on performance. This practical approach pinpoints specific areas for improvement and confirms that the system meets client expectations. Latency deserves particular attention, since a response time of 300-500 ms is considered optimal for natural interactions. Intone’s real-time voice stack supports this by enabling agents to respond promptly, which reduces latency and improves customer satisfaction.

- Analyze Data Trends: Review latency data over time to uncover patterns and correlations with user experience. These trends help pinpoint the areas that need attention and guide targeted optimizations. For instance, the AI voicebot deployed at Hawesko on Intone’s infrastructure showed that a low-latency agent can handle up to 80% of interactions without human involvement.

- Iterate Based on Feedback: Use insights from this analysis to make iterative improvements to the AI voice agent. This ongoing process keeps latency continually optimized and improves the overall effectiveness of the voice technology, supported by Intone’s commitment to 99.9% uptime.
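The metrics and trend analysis described above both start from logged per-turn latencies. The sketch below summarizes them for a dashboard or report, assuming those latencies are already collected in milliseconds. It reports the average response time the text recommends, plus the median, the 95th percentile, and the share of turns under a 500 ms target. The percentiles are a common practice added here, not a requirement from the text. It uses the nearest-rank percentile definition, one of several in use, and the sample data is hypothetical.

```python
import math
from typing import Dict, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no values")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_kpis(turn_latencies_ms: Sequence[float], target_ms: float = 500.0) -> Dict[str, float]:
    """Summarize per-turn latencies for a dashboard or periodic report."""
    n = len(turn_latencies_ms)
    if n == 0:
        raise ValueError("no turns recorded")
    return {
        "mean_ms": sum(turn_latencies_ms) / n,
        "p50_ms": percentile(turn_latencies_ms, 50),
        "p95_ms": percentile(turn_latencies_ms, 95),
        "share_within_target": sum(1 for x in turn_latencies_ms if x < target_ms) / n,
    }


if __name__ == "__main__":
    # Hypothetical latencies for ten turns, in milliseconds.
    turns = [300, 320, 350, 400, 420, 450, 480, 510, 900, 1500]
    for name, value in latency_kpis(turns).items():
        print(f"{name}: {value:.2f}")
```

For the sample turns, the median is 420 ms and 70% of turns meet the 500 ms target. However, the mean is 563 ms and the 95th percentile is 1500 ms. Tracking only one number would hide either how typical turns feel or how badly the slowest ones lag.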

Conclusion

Mastering latency in speech recognition is crucial for enhancing AI voice performance. Minimizing delays between spoken input and system response is vital, as it significantly affects user experience. By addressing latency, businesses can foster more natural interactions and improve overall satisfaction.

Key arguments highlight various sources of latency, including:

- Network issues

- Processing delays

- Hardware limitations

- Environmental factors

Strategies such as optimizing network infrastructure, leveraging edge computing, and utilizing efficient hardware are essential for reducing latency. Ongoing measurement and analysis are critical for continuous improvement, ensuring that AI voice agents perform optimally and meet user expectations.

Ultimately, reducing latency in voice applications transcends a mere technical challenge; it is a vital aspect of delivering superior user experiences. As the industry evolves, embracing these strategies will be key to staying competitive and ensuring that AI voice technologies remain responsive and engaging. The future of AI voice performance hinges on a steadfast commitment to minimizing latency, making it a priority for developers and businesses alike.

Frequently Asked Questions

What is latency in speech recognition?

Latency in speech recognition is the time delay between a person's spoken input and the system's response, measured in milliseconds (ms).

Why is latency important in speech recognition?

Latency is important because excessive wait times can lead to uncomfortable pauses that interrupt the flow of conversation, negatively impacting user experience.

What is the ideal latency for optimal interaction in speech recognition?

An ideal latency for optimal interaction is under 500 ms, as it fosters a more natural dialogue.

How is latency in speech recognition expected to change by 2025?

By 2025, advancements in speech AI are expected to reduce average delays further, improving responsiveness and customer satisfaction.

How does reducing latency affect user satisfaction and engagement?

Reducing latency boosts user satisfaction and engagement; according to Telnyx, each millisecond saved in voice AI performance enhances user experience.

In what applications is low latency particularly crucial?

Low latency is particularly crucial in applications like virtual assistants and customer service voicebots, where maintaining a seamless conversation is essential.

What issues can arise from very short response times in situations requiring long inputs?

When users must give long alphanumeric inputs, a system tuned to respond very quickly may treat a pause as the end of input, which can frustrate customers.